PRACTICAL AI Marketing Solutions

Most marketing teams are still treating AI like a smarter intern. Write a better prompt, get a better output, repeat. But something more disruptive is happening beneath the surface. With models like Claude Opus 4.6, the shift is no longer about generating words faster. It is about handing real work over to systems that can plan, coordinate, and execute. Once that clicks, a lot of familiar SaaS tools start to look less like leverage and more like legacy process. This article is about that shift, and why “vibe working” is quietly becoming the new default.

Andy Mills

06/02/2026

Something uncomfortable is happening in software right now.

The WisdomTree Cloud Computing Fund is down more than 20 percent year to date. That is not a blip. That is investors quietly admitting that a lot of “defensible” SaaS was never that defensible in the first place.

What is eating away at those moats is not another shiny AI feature bolted onto an old product. It is a deeper shift in how work actually gets done.

Claude Opus 4.6 makes this painfully clear.

We are moving from talking to AI to handing work over. And once you cross that line, a lot of your existing SaaS stack starts to look like expensive friction.

For marketing teams, this is not a tooling upgrade. It is an operating model change.

Let’s start with a quiet truth.

Most marketers are not bad at AI because they cannot write prompts. They are bad at AI because the workflow itself is broken.

Until now, AI adoption has looked like this:

Write a prompt to draft something

Write another prompt to analyse data

Write another prompt to format it

Move everything between three tools

Repeat

That is not leverage. That is just faster busywork.

Claude Opus 4.6 kills this model by changing how work is executed, not just how answers are generated.

One of the most important additions in Claude Opus 4.6 is agent teams, currently available as a research preview inside Claude Code.

This is not a single assistant waiting for instructions. It is a group of specialised agents working in parallel.

Instead of one model plodding through a checklist, you get a team that:

Splits complex work into independent chunks

Shares context automatically

Flags blockers without being asked

Coordinates like a high-performing department
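To make the "independent chunks in parallel" idea concrete, here is a minimal sketch of the fan-out, fan-in pattern using Python's standard library. It is not how Claude Code implements agent teams. The three agent functions and `run_team` are hypothetical stand-ins, and the sketch leaves out shared context and blocker flagging entirely.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical stand-ins for specialised agents. In a real setup each one
# would call a model; here they just return a labelled string.
def research_agent(brief: str) -> str:
    return f"research notes for: {brief}"

def analysis_agent(brief: str) -> str:
    return f"data analysis for: {brief}"

def review_agent(brief: str) -> str:
    return f"brand review for: {brief}"

def run_team(brief: str, agents: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Give every agent the same brief, run them in parallel, collect results by name."""
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        # Fan out: submit one job per agent.
        futures = {name: pool.submit(fn, brief) for name, fn in agents.items()}
        # Fan in: wait for each job and gather its output.
        return {name: future.result() for name, future in futures.items()}

team = {
    "research": research_agent,
    "analysis": analysis_agent,
    "review": review_agent,
}
results = run_team("Q3 product launch", team)
for name, output in results.items():
    print(f"{name}: {output}")
```

The point of the sketch is the shape of the work: one brief goes in, several independent jobs run side by side, and a single reviewer reads the combined output.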

This matters more than it sounds.

For a CMO, the job stops being “manage five tools and twenty prompts” and becomes “brief a team once and review the output”.

Scott White from Anthropic put it well when he explained that these agents work faster by mimicking how real teams operate. When the work splits into independent pieces, parallel effort beats linear effort.

Right now, this is strongest in read-heavy workflows like code reviews and research. But the direction is obvious.

AI is no longer a tool you use. It is a function you manage.

You have probably heard the term vibe coding. Non-technical people shipping real software by describing what they want, not how to build it.

That idea is now escaping engineering.

We are entering the era of vibe working.

“I think we are now transitioning almost into vibe working.”

Scott White, Head of Product for Enterprise, Anthropic

Vibe working means you start with intent, not instructions.

A messy brainstorm. A raw CSV export. Half-formed ideas in a Notion doc.

Instead of turning that into ten micro-tasks for junior staff, the model infers structure, creates output, and fills in the gaps in one go.

This quietly kills an entire category of work.

The junior analyst whose job was slide-decking, formatting, and basic synthesis is being automated out, not because AI is “better”, but because the work itself never required human judgement in the first place.

The most telling part of Claude Opus 4.6 is not the chat interface. It is the deep integration with Excel and PowerPoint.

In Excel, the model plans its steps before touching the data. It does not just transform rows. It reasons about the transformation.

In PowerPoint, it goes further.

It reads slide masters

Understands brand layouts

Matches fonts and structure

Outputs decks that look finished, not drafted

This is important.

For years, the defence of bloated SaaS stacks was that “execution still takes time”. That formatting, compliance, and brand alignment created necessary friction.

That friction is disappearing.

When AI understands your templates better than your team, the template itself stops being a job.

One of the smartest ideas in Opus 4.6 is also one most teams will underuse.

The /effort parameter.

This is effectively a dimmer switch for intelligence. And it matters because not every task deserves deep reasoning.

You get four levels:

Low for speed and cheap tasks like copy tweaks or summaries

Medium for routine professional work

High, the default, for most knowledge tasks

Max for genuinely complex analysis

Why does this matter?

Because overthinking costs money.

Letting a deep reasoning model burn cycles on trivial work is like paying a senior strategist to rename files. It feels sophisticated, but it is inefficient.

Teams that learn to dial intelligence up and down will move faster and protect their budgets. Everyone else will complain that AI is “too slow” or “too expensive”.
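One way a team might operationalise this is a simple routing table that picks an effort level per task type. This is a minimal sketch under stated assumptions: the four level names come from the list above, but the task categories, the `Effort` enum, and `pick_effort` are hypothetical and not part of any Anthropic API.

```python
from enum import Enum

class Effort(str, Enum):
    """The four effort levels described above."""
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    MAX = "max"

# Hypothetical task categories for a marketing team; adjust to your own work.
EFFORT_BY_TASK = {
    "copy_tweak": Effort.LOW,
    "summary": Effort.LOW,
    "routine_report": Effort.MEDIUM,
    "attribution_analysis": Effort.MAX,
}

def pick_effort(task_type: str) -> Effort:
    """Return the effort level for a task, falling back to HIGH (the stated default)."""
    return EFFORT_BY_TASK.get(task_type, Effort.HIGH)

for task in ("copy_tweak", "routine_report", "strategy_memo", "attribution_analysis"):
    print(f"{task}: {pick_effort(task).value}")
```

Anything not in the table falls back to high, which mirrors the default described above. The design choice is to make cheap tasks cheap on purpose rather than by accident.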

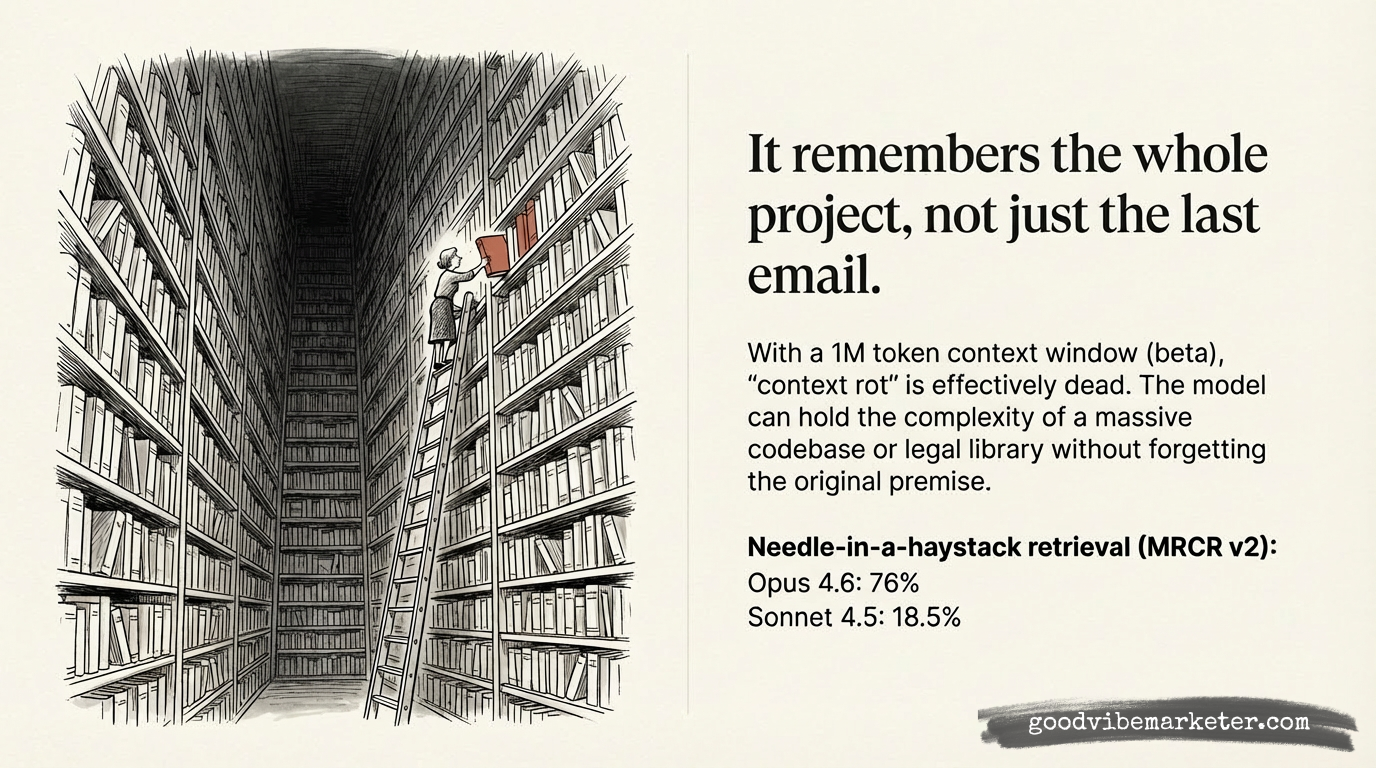

Long-running AI projects have always had a problem.

Context rot.

As conversations grow, the model forgets constraints. Brand rules drift. The brief gets fuzzy. Outputs slowly degrade.

Claude Opus 4.6 tackles this head on with two things.

A one million token context window

Context compaction

Instead of the conversation hitting a hard limit and losing memory, older context is summarised and compressed while preserving intent.
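The general idea can be illustrated with a toy example. The sketch below is not Anthropic's implementation. It assumes a crude word count as a stand-in for tokens and a placeholder summariser that keeps the first sentence of each older message. All function names are hypothetical.

```python
from typing import Callable, List

def rough_token_count(text: str) -> int:
    """Crude stand-in for a tokenizer: count whitespace-separated words."""
    return len(text.split())

def first_sentence_summary(messages: List[str]) -> str:
    """Placeholder summariser: keep only the first sentence of each message."""
    firsts = [m.split(".")[0].strip() for m in messages]
    return "Summary of earlier context: " + "; ".join(firsts) + "."

def compact(
    history: List[str],
    budget: int,
    summarise: Callable[[List[str]], str] = first_sentence_summary,
    keep_recent: int = 2,
) -> List[str]:
    """If history exceeds the budget, fold everything except the most recent
    `keep_recent` messages into a single summary message."""
    total = sum(rough_token_count(m) for m in history)
    cut = len(history) - keep_recent
    if total <= budget or cut <= 0:
        return list(history)  # nothing to compact
    older, recent = history[:cut], history[cut:]
    return [summarise(older)] + recent

history = [
    "Brand voice is plain and direct. Avoid jargon.",
    "Budget is capped at 50k. No paid social.",
    "Draft the Q3 email.",
    "Shorten the subject line.",
]
for message in compact(history, budget=10):
    print(message)
```

A real system would use a model to write the summary, which is where "preserving intent" is won or lost. The toy version only shows the mechanics: recent turns stay verbatim, older turns shrink.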

The result is not theoretical.

On the MRCR v2 needle-in-a-haystack benchmark, which tests whether a model can retrieve specific facts buried in very long inputs, Opus 4.6 scored 76 percent. Claude Sonnet 4.5 managed 18.5 percent.

For marketers, this means something practical.

You can now give the model:

A year of campaign data

Competitor analysis

Brand guidelines

Previous outputs

And expect it to stay on brief.

That unlocks long-horizon collaboration instead of disposable chats.

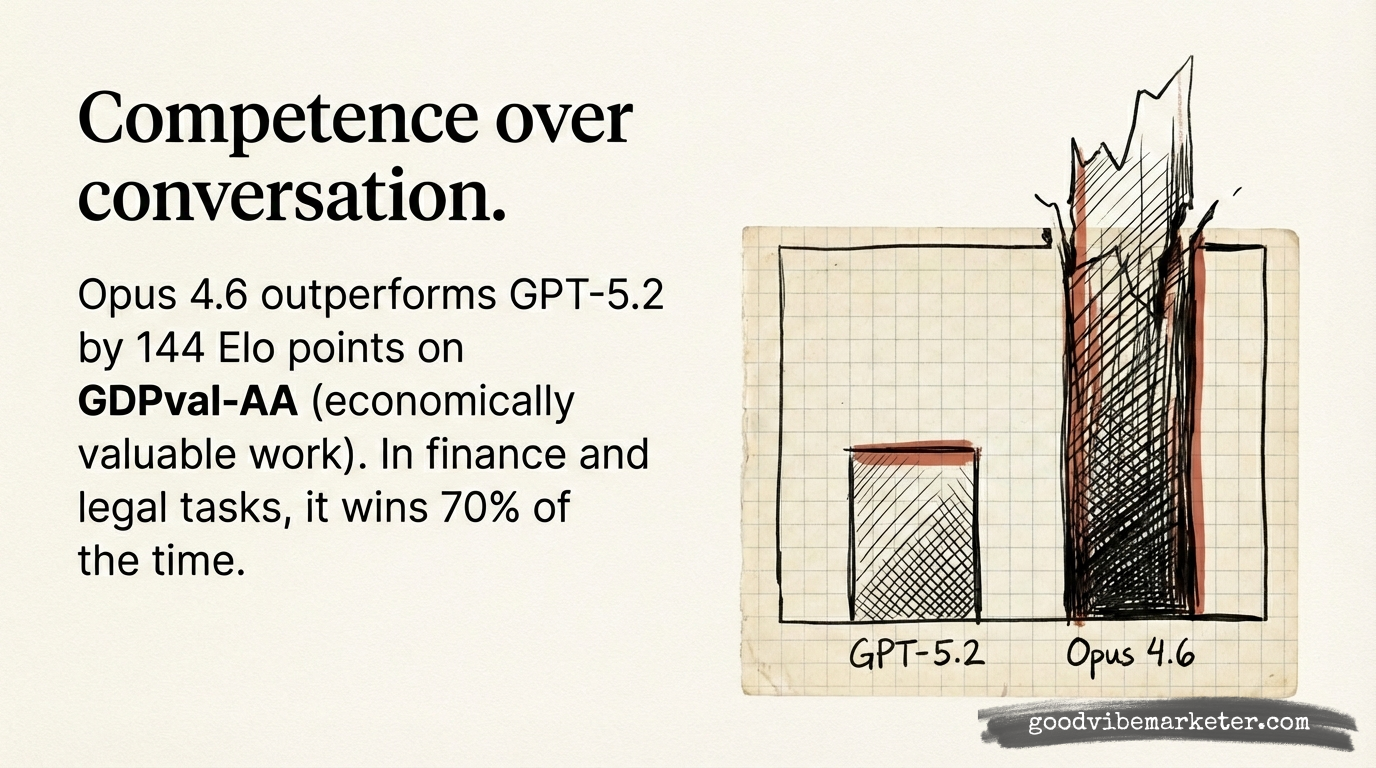

Big context is useless if reasoning is weak.

This is where Opus 4.6 quietly resets expectations.

On the GDPval-AA benchmark, which measures economically valuable knowledge work in domains like finance and law, Opus 4.6 beat GPT-5.2 by 144 Elo points.

That translates to winning around 70 percent of head-to-head professional tasks.
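The conversion from Elo gap to win rate follows the standard Elo expected-score formula, E = 1 / (1 + 10^(-gap/400)). A short sketch, assuming the benchmark uses the conventional 400-point scale (the function name is illustrative):

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score for the higher-rated side under the standard Elo model.

    Uses the conventional 400-point scale. Expected score counts a draw as
    half a win, so it is a win rate only when ties are rare or excluded.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))

gap = 144  # Elo gap quoted above
print(f"Expected score at a {gap}-point gap: {elo_expected_score(gap):.1%}")
# prints: Expected score at a 144-point gap: 69.6%
```

So a 144-point lead works out to just under 70 percent, which matches the figure above.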

This is not about trivia or maths puzzles. It is about judgement, synthesis, and decision support.

The model is moving out of the “test passing” phase and into work that actually carries economic weight.

For marketing leaders, that means AI outputs are no longer just drafts. They are inputs into real decisions.

Here is the uncomfortable implication.

If a single agentic system can research, analyse, reason, and produce brand-compliant output, a lot of SaaS tools stop earning their seat at the table.

Not because they are bad products.

Because they solve problems that no longer exist.

What used to require five tools, three handoffs, and endless coordination can now be handled by one well-briefed system.

That does not mean all SaaS disappears. It means the bar for “must-have” rises sharply.

Anything that adds friction instead of leverage is at risk.

Claude Opus 4.6 marks a real shift.

We are moving away from AI as a typing assistant and towards AI as a collaborator that can own chunks of work end to end.

The winning teams will not be the ones with the best prompts.

They will be the ones who:

Design work around agent teams

Reduce unnecessary tools

Brief clearly instead of micromanaging

Treat AI like a function, not a feature

Vibe working is not a gimmick. It is an operational necessity.

The only real question is whether your organisation adapts deliberately, or waits until half its stack quietly becomes legacy friction.

And by then, the decision might already have been made for you.