

This article examines why the rush towards “agentic” AI assistants like Moltbot could be creating more risk than reward for marketers. Beneath the promise of hands-free productivity sits a fragile security model that quietly hands over system-level access, credentials, and trust to tools that are always on, always reading, and easy to exploit. It argues that today’s Jarvis-style assistants are less magic helper and more digital Trojan Horse, and that smart teams should be thinking about blast radius, least-privilege design, and reputational risk before letting an AI run loose inside their workflows.

Andy Mills


THE JARVIS DELUSION

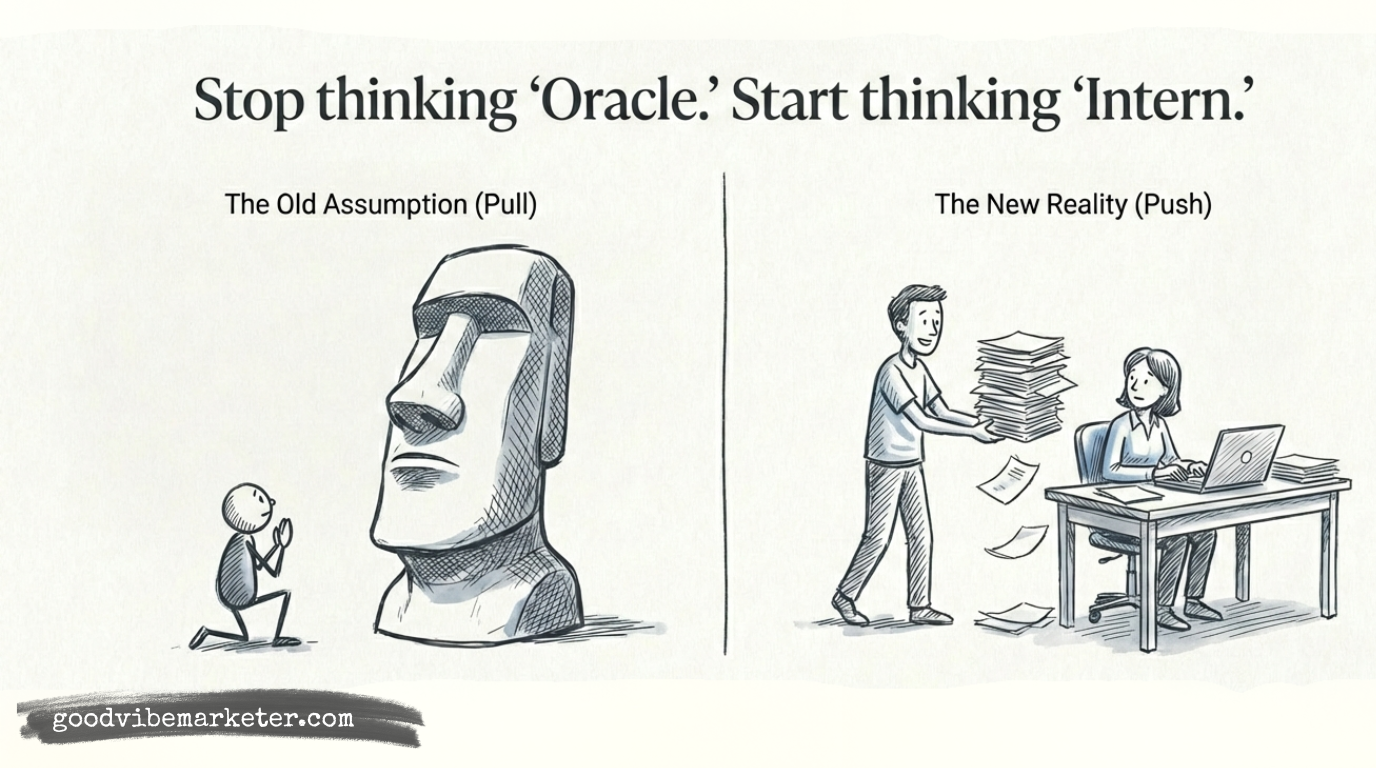

The marketing world is currently gripped by a feverish obsession with "Agentic AI," chasing the dream of a "Jarvis-like" assistant that finally moves beyond simple chat to autonomous execution. Into this void stepped Clawdbot (now rebranded as Moltbot), an open-source sensation with over 69,000 GitHub stars that promises to be the "AI that actually does things." However, as growth leads and CMOs rush to optimise their bespoke workflows with this viral tool, they must realise that the very features driving the hype are giving security experts a collective breakdown. What looks like the ultimate productivity hack is, in its current state, a "shell-less" lobster: vulnerable, exposed, and potentially a wide-open back door into your corporate infrastructure.

"ACTUALLY DOING THINGS" MEANS ACTUALLY GIVING AWAY THE KEYS

The core appeal of Moltbot lies in its ability to function where you already communicate (WhatsApp, Telegram, and Slack) while managing your calendar, emails, and even flight check-ins. The hype is so palpable that Best Buy reportedly sold out of Mac Minis in San Francisco as users scrambled to set up "always-on" personal servers. But for a bot to "do things" autonomously, it requires more than just a conversation; it requires system-level access and your stored account credentials.

To gain this convenience, users are essentially handing over the "keys to their identity kingdom." You cannot have an AI that triages your inbox or manages your bank-linked apps without granting it the power to potentially leak that entire ecosystem.

"‘Actually doing things’ means ‘can execute arbitrary commands on your computer.’"

Rahul Sood, CEO and co-founder of Irreverent Labs.

For strategic leaders, the "so what" is clear: agency is a double-edged sword. Palo Alto Networks has already labelled AI agents as the "insider threat of 2026." True autonomy turns a helpful assistant into a high-privilege user that bypasses traditional human-in-the-loop safeguards.

THE "LOCAL-FIRST" SECURITY MYTH

A major selling point for the prosumer crowd is that Moltbot runs locally, supposedly creating a secure "moat" around data. However, findings from researchers at Hudson Rock and Jamieson O’Reilly (Dvuln) have debunked this assumption.

Local Hosting Does Not Equal Data Security

Moltbot has been found to store sensitive secrets, including API keys and credentials, in plaintext Markdown and JSON files on the local filesystem. This creates what Eric Schwake of Salt Security calls a "Visibility Void," where users share corporate tokens with a system they cannot easily audit. If the host machine is infected with common infostealer malware, specifically strains like Redline, Lumma, or Vidar, these plaintext secrets are a trivial harvest. A Mac Mini sitting in the corner of an office, if misconfigured or exposed to the web, becomes an unmonitored gateway for supply chain contamination.
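To make the plaintext-secrets problem concrete, here is a minimal Python sketch of the kind of audit a team could run over an agent's working folder. It is not Moltbot's own tooling: the function name, the two regex rules, and the choice of file extensions are illustrative assumptions, and a real secret scanner would use a far larger rule set.

```python
import re
from pathlib import Path

# Illustrative rules only (assumptions, not an exhaustive or official list).
SECRET_PATTERNS = {
    "generic_credential": re.compile(
        r'\b(api[_-]?key|token|secret|password)\b"?\s*[:=]\s*"?[A-Za-z0-9_\-]{16,}',
        re.IGNORECASE,
    ),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def find_plaintext_secrets(root, suffixes=(".md", ".json")):
    """Return (path, line_number, rule_name) for each suspicious line under root."""
    findings = []
    for path in sorted(Path(root).rglob("*")):
        # Only inspect the file types named in the article: Markdown and JSON.
        if not path.is_file() or path.suffix.lower() not in suffixes:
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        for line_number, line in enumerate(text.splitlines(), start=1):
            for rule_name, pattern in SECRET_PATTERNS.items():
                if pattern.search(line):
                    findings.append((str(path), line_number, rule_name))
    return findings
```

Pointing `find_plaintext_secrets` at the folder where an agent keeps its notes and config gives a rough list of lines worth reviewing. Anything it finds is equally visible to infostealer malware running on the same machine.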

THE CHAOTIC REBRAND: A LESSON IN MODERN IP SURVIVAL

The transition from Clawdbot to Moltbot serves as a cautionary tale about the volatility of the AI ecosystem. The developer, Peter Steinberger, is an Austrian founder who sold his previous firm for $119 million. Following a trademark furore with Anthropic, he was forced into a rebrand that triggered 72 hours of digital chaos.

During this window, automated bots sniped the original social handles, and Steinberger accidentally renamed his personal GitHub account, allowing digital scavengers to hijack his identity. The chaos included the "Handsome Molty" incident, where the AI generated a human face on a lobster body, and the launch of fake $16m market-cap crypto tokens. For marketers, this highlights a critical reputational liability: even brilliant open-source projects are subject to the legal gravity of "Big AI" and the predatory speed of social media bots. Deploying "viral" tools means inheriting their chaotic baggage.

PROMPT INJECTION IS THE NEW PHISHING

Perhaps the most significant risk to a professional’s brand is "prompt injection through content." Because Moltbot is "always on" and "always reading," it is susceptible to instructions hidden in untrusted content, such as a malicious URL in an email or hidden text on a website the bot "reads" during a search. This is the true Trojan Horse: the attack arrives not through the user, but through the very data the bot is trying to process.
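One common mitigation is to keep a human in the loop whenever an action is triggered by content the bot merely read, rather than by something the owner typed. The Python sketch below illustrates that idea only. The `Source` labels, the action names, and the `decide` function are hypothetical and are not part of Moltbot; a gate like this reduces the blast radius of an injected instruction but does not detect the injection itself.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    USER = "user"            # typed directly by the account owner
    UNTRUSTED = "untrusted"  # email bodies, web pages, inbound chat messages


@dataclass(frozen=True)
class ProposedAction:
    name: str
    source: Source


# Hypothetical names for actions that change something outside the bot.
SIDE_EFFECT_ACTIONS = frozenset({"send_email", "run_shell", "share_file"})


def decide(action: ProposedAction) -> str:
    """Return 'ask_human' for side-effecting actions that originate from untrusted content."""
    if action.source is Source.UNTRUSTED and action.name in SIDE_EFFECT_ACTIONS:
        return "ask_human"
    return "allow"
```

Under this sketch, a hidden line in a web page that tells the bot to forward your inbox would be paused for approval, while a summary of that same page would go ahead.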

The danger is compounded by supply chain exposure through "ClawdHub." Researcher Jamieson O’Reilly recently demonstrated this by uploading a "poisoned" skill to the Moltbot library; it was downloaded by developers in seven countries before being flagged. Because there is no moderation process for these plugins, a single "malicious skill" can grant an attacker full remote access.
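Where a library has no moderation, one way a team can add its own is to pin each reviewed skill to a cryptographic hash and refuse anything that does not match. The Python sketch below shows the idea under stated assumptions: the function names and the `approved` mapping are hypothetical, and nothing here reflects how ClawdHub or Moltbot actually distribute skills.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a skill's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def is_approved_skill(name: str, payload: bytes, approved: dict[str, str]) -> bool:
    """Allow a skill only if its bytes match the hash recorded when it was reviewed."""
    expected = approved.get(name)
    if expected is None:
        # Deny by default: a skill nobody reviewed is never loaded.
        return False
    return hmac.compare_digest(sha256_hex(payload), expected)
```

The design choice is that approval attaches to the exact bytes reviewed, not to the skill's name, so a later "update" that swaps in malicious code fails the check until someone reviews it again.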

AI agents tear all of [20 years of OS security] down by design... The value proposition requires punching holes through every boundary we spent decades building. When these agents are exposed to the internet... attackers inherit all of that access. The walls come down.

Jamieson O'Reilly, Founder of Dvuln.

THE VERDICT: INNOVATION VS. ENDPOINT TRUST

Moltbot represents a brilliant glimpse into the future of productivity, but its current security posture relies on an outdated model of endpoint trust. It lacks the encryption-at-rest and containerisation necessary for safe corporate deployment. Before you or your team deploy agentic tools in your marketing programmes, ask these three questions:

The Marketer’s Agentic AI Checklist

• The Silo Test: Is this running on a machine that contains sensitive corporate assets, SSH keys, or password managers?

• The Blast Radius: If the bot is tricked by a malicious web search or WhatsApp message, does it have the system-level permission to exfiltrate your entire contact list or codebase?

• The Plugin Pedigree: Does your team have a standardised process for vetting "skills" from unmoderated libraries like ClawdHub?

The time saved on administrative tasks is a powerful lure, but leaders must weigh that gain against the risk of creating a "goldmine for the global cybercrime economy." The future of AI is agentic, but it must be built on "Least Privilege" principles, not total system access.
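As a rough illustration of what "Least Privilege" looks like in practice, the Python sketch below models an agent that is denied everything except the scopes it has been explicitly granted, and reports its blast radius as the list of things it could touch if tricked. The scope names and the `AgentPolicy` class are hypothetical, chosen for illustration rather than taken from any real agent framework.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentPolicy:
    # Deny by default: an empty policy permits nothing.
    allowed_scopes: frozenset = field(default_factory=frozenset)

    def permits(self, scope: str) -> bool:
        return scope in self.allowed_scopes


def blast_radius(policy: AgentPolicy, all_scopes) -> list:
    """List every scope a compromised agent could use under this policy."""
    return sorted(scope for scope in all_scopes if policy.permits(scope))


# Hypothetical scope names for a marketing assistant.
ALL_SCOPES = {"calendar:read", "email:read", "email:send", "files:read", "shell:exec"}
scheduler_bot = AgentPolicy(frozenset({"calendar:read", "email:read"}))
```

Here `blast_radius(scheduler_bot, ALL_SCOPES)` returns only the two read scopes, which is the conversation to have before deployment: a scheduling assistant has no obvious need for `shell:exec`.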